基于Q-Learning 的FlappyBird AI

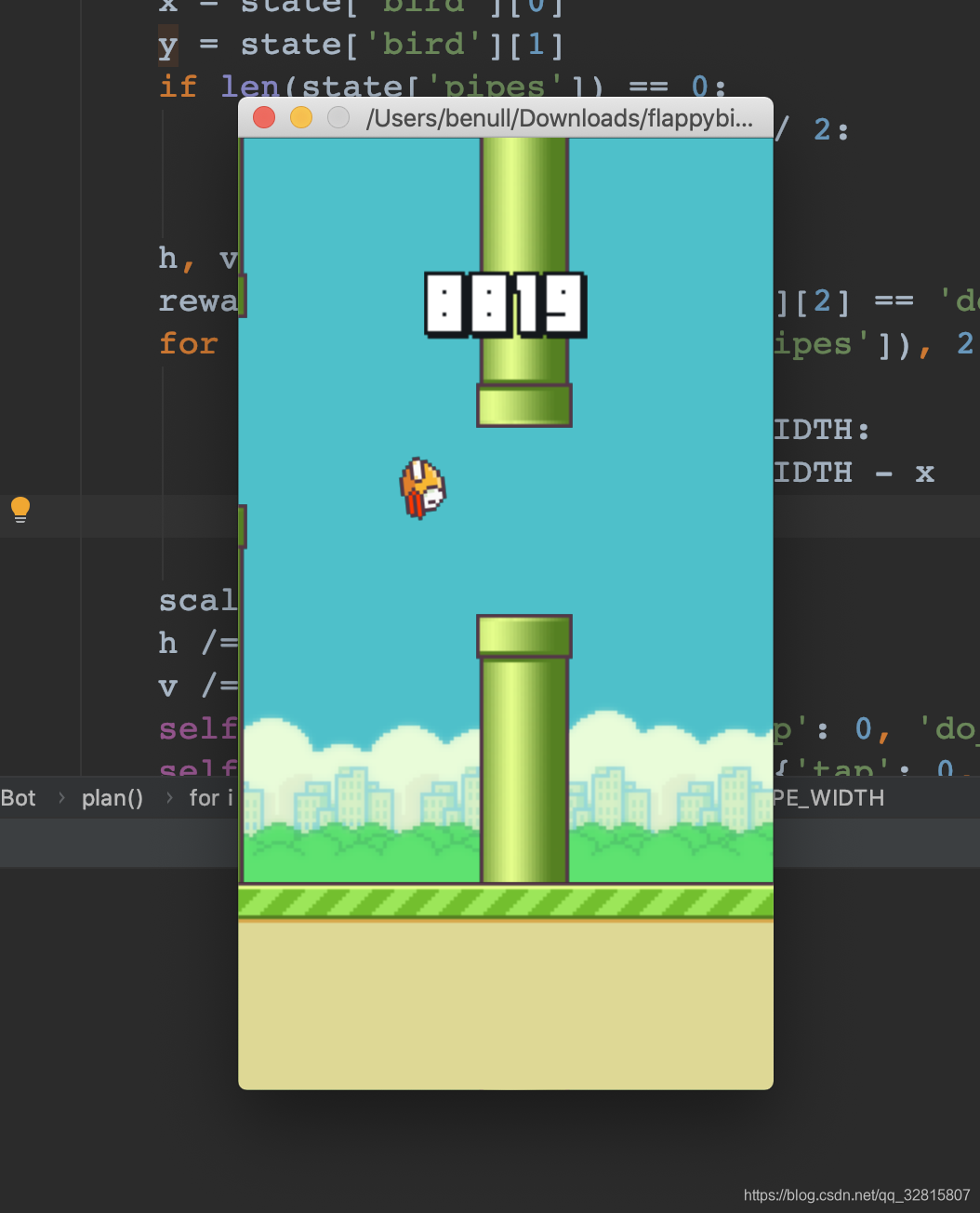

在birdbot实现的FlappyBird基础上训练AI,这个FlappyBird的实现对游戏进行了简单的封装,可以很方便得到游戏的状态来辅助算法实现。同时可以显示游戏界面方便调试,能够看到算法实现的效果。也可以选择关闭游戏界面以及声音,这样游戏仍然能正常运行,一般用于训练阶段,可以减少CPU的占用

实现参考的是SarvagyaVaish的Flappy Bird RL

Q-Learning

Q-Learning是强化学习算法中value-based的算法

Q即为Q(s,a)就是在某一时刻的 s 状态下(s∈S),采取 动作a (a∈A)动作能够获得收益的期望,环境会根据agent的动作反馈相应的回报reward,所以算法的主要思想就是将State与Action构建成一张Q-table来存储Q值,然后根据Q值来选取能够获得最大的收益的动作

| Q-Table | a1 | a2 |

|---|---|---|

| s1 | q(s1,a1) | q(s1,a2) |

| s2 | q(s2,a1) | q(s2,a2) |

| s3 | q(s3,a1) | q(s3,a2) |

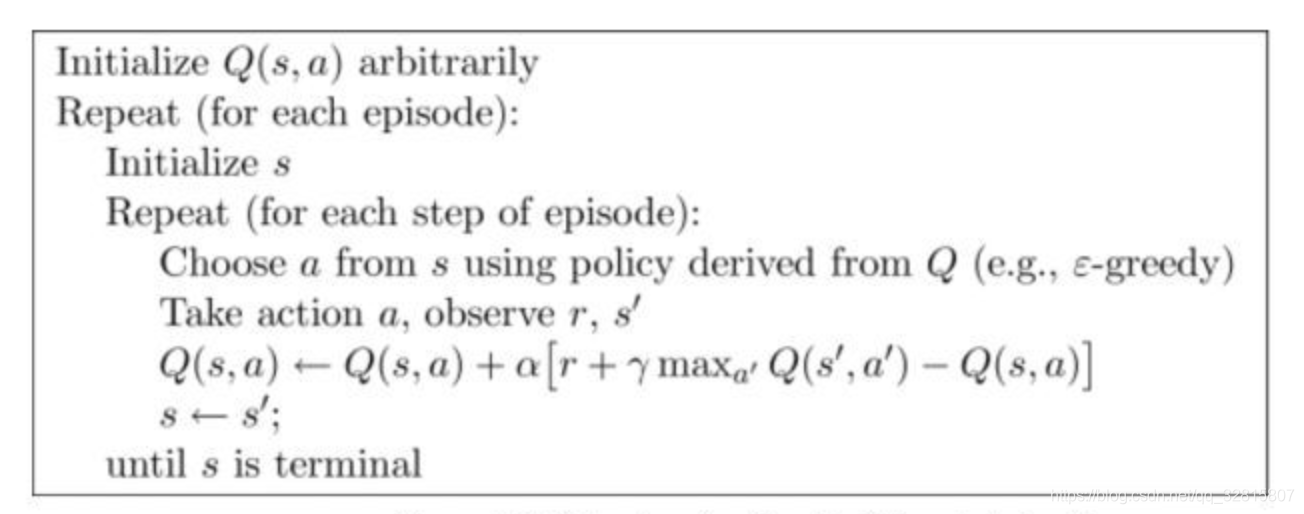

算法流程

在更新的过程中,引入了学习速率alpha,控制先前的Q值和新的Q值之间有多少差异被保留

γ为折扣因子,0<= γ<1,γ=0表示立即回报,γ趋于1表示将来回报,γ决定时间的远近对回报的影响程度

详细的Q-Learning过程可以参考下面这篇

A Painless Q-learning Tutorial (一个 Q-learning 算法的简明教程)

FlappyBird中应用

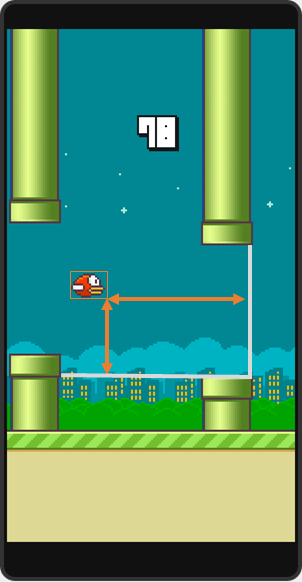

状态空间

- 从下方管子开始算起的垂直距离

- 从下一对管子算起的水平距离

- 鸟:死或生

动作

每一个状态,有两个可能的动作

- 点击一下

- 啥也不干

奖励

奖励的机制完全基于鸟是否存活

- +1,如果小鸟还活着

- -1000,如果小鸟死了

流程

伪代码

1 | 初始化 Q = {}; |

观察Flappy Bird处于什么状态,并执行最大化预期奖励的行动。然后继续运行游戏,接着获得下一个状态s’

观察新的状态s’和与之相关的奖励:+1或者-1000

根据Q Learning规则更新Q阵列

Q[s,a] ← Q[s,a] + α (r + γ*V(s’) - Q[s,a])

设定当前状态为s’,然后重新来过

代码

1 | import pyglet |

全部代码见github仓库